Today we are excited to announce that TriggerMesh Event Pipes can run on DigitalOcean App Platform. This brings eventing to DigitalOcean customers.

Rodric Rabbah, Director of Serverless at DigitalOcean, said: “We are excited to see the new use cases that TriggerMesh event pipes deployed on DigitalOcean's App Platform will unlock for our customers. Event pipes can be consumed with DigitalOcean's serverless products. Event pipes complement web and scheduled event sources that our customers are using for event-based architectures on DigitalOcean.”

On the heels of our Shaker release in December, where we introduced our powerful CLI, this new functionality allows users to develop locally and then deploy remotely on DigitalOcean.

This workflow from development to production also happens without any Kubernetes. The only requirement is Docker running locally, so that the TriggerMesh components can run on your machine. After that, you are ready to deploy to DigitalOcean App Platform.

This is extremely useful for hydrating a data lake based on MongoDB (see our new MongoDB target), running managed Kafka connectors with our Kafka source and sinks, or triggering DigitalOcean functions based on cloud events from AWS, Google, Azure, or other SaaS.

The process is quite straightforward.

First, create an event pipe with the TriggerMesh CLI, tmctl. Once you are happy with it, stop all the components to avoid consuming events from both your local environment and your DigitalOcean app.

Then you can dump its declarative representation into a DigitalOcean App Platform specification like so and inspect it:
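As a minimal sketch of this step, the dump can be wrapped in a small shell function. It assumes that `tmctl dump` accepts `--platform digitalocean` (check `tmctl dump --help` for your version). The function name `dump_spec` and the file name `app.yaml` are hypothetical, chosen only for illustration.

```shell
# Sketch: write the event pipe's App Platform spec to a file, then print it.
# ASSUMPTION: `--platform digitalocean` is the flag that selects the
# DigitalOcean output format; verify with `tmctl dump --help`.
# SPEC_FILE and dump_spec are illustrative names, not part of tmctl.
SPEC_FILE="${SPEC_FILE:-app.yaml}"

dump_spec() {
    # Redirect the declarative representation into the spec file.
    tmctl dump --platform digitalocean > "$SPEC_FILE" || return 1
    # Print the generated spec so it can be inspected before deploying.
    cat "$SPEC_FILE"
}
```

Calling `dump_spec` from the directory where you ran tmctl should leave the spec in `app.yaml` and echo it to the terminal for review.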

Finally, deploy the event Pipe using the DigitalOcean CLI doctl like so:
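A minimal sketch of the deployment step follows. It assumes doctl is installed and already authenticated (for example with `doctl auth init`), and that the spec was saved to a file. The function name `deploy_spec` and the default file name `app.yaml` are hypothetical.

```shell
# Sketch: create a new App Platform app from a previously dumped spec.
# ASSUMPTION: doctl is installed and authenticated (`doctl auth init`).
# SPEC_FILE and deploy_spec are illustrative names, not part of doctl.
SPEC_FILE="${SPEC_FILE:-app.yaml}"

deploy_spec() {
    # `doctl apps create --spec <file>` creates an app from an app spec file.
    doctl apps create --spec "$SPEC_FILE"
}
```

Running `deploy_spec` creates a new app, so run it once per event pipe rather than on every change to the spec.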

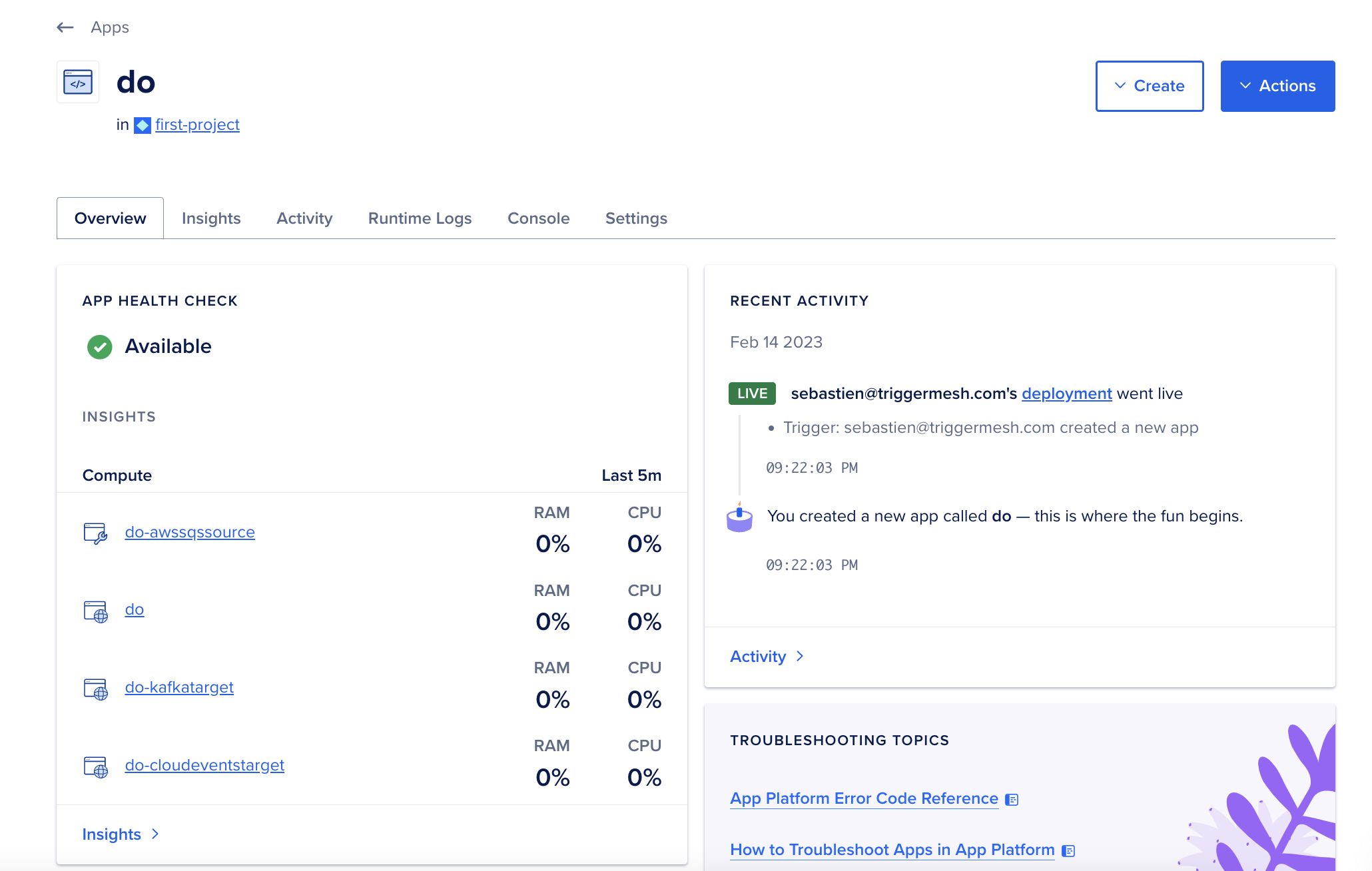

You will see a new app in the DigitalOcean console, with each TriggerMesh component running as a container. The snapshot below shows an event pipe consuming events from AWS SQS and sending them to both a Kafka cluster and a DigitalOcean function using the TriggerMesh CloudEvents target.

You will be able to access the runtime logs of each component in the DigitalOcean console as seen in the snapshot below:

Join the TriggerMesh community on Slack and check out our documentation to try it for yourself.

To see all the steps live, watch this 5-minute screencast:

Enjoy!